Updated: Where the House and Senate Are on Internal AI

Updated September 27, 2024

When it comes to adopting modern technology, “government innovation” has long risked being an oxymoron. In adapting to the emergence of AI, however, Congress tells a different story. While debates over how to regulate these emerging technologies continue across Capitol Hill, institutional offices within the Senate and House of Representatives have taken steps to explore how this paradigm shift affects internal Congressional operations. For the first time in recent memory, Congress isn’t “behind the times.” Instead, it has proactively set policy guidance that fosters institutional agility in the face of AI’s exciting potential, and it continues to update those policies to allow for innovation.

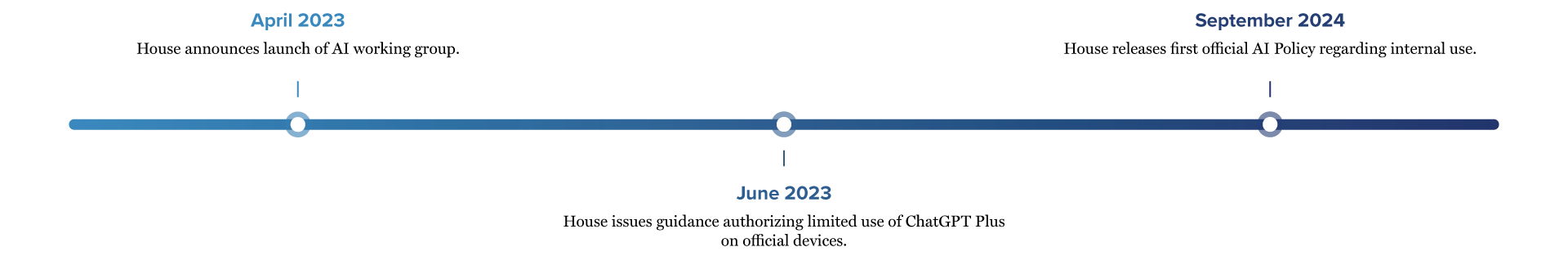

House Guidance and Initiatives Over Time

In April 2023, the House Digital Service (HDS), an innovation hub within the technology department of the House Chief Administrative Officer (CAO), announced the launch of an institution-hosted AI working group. This pilot project provided 40 ChatGPT licenses to a bipartisan group of staff, creating a stream of use-case examples and user-experience feedback to aid the House’s understanding of how generative AI (GenAI) could be adapted to Hill workflows.

In June 2023, following the launch, the CAO’s House Information Resources (HIR) issued House-wide guidance on the authorized use of GenAI on House-issued devices. This policy:

Authorized ChatGPT Plus, the paid version of ChatGPT, as the only approved large language model (LLM) for use on official devices because of its advanced privacy features

Limited authorized use of ChatGPT to research and evaluation tasks

Prohibited staff from fully integrating the LLM into regular operations

Required staff to use the LLM with privacy settings enabled and only with non-sensitive data

Current House Guidance: the House AI Policy

In September 2024, the CAO announced the establishment of the House’s first AI policy (HITPOL 8) via an e-Dear Colleague. The policy, which is posted on HouseNet and available to Congressional staff behind the House’s firewall, outlines approved use cases for AI technology and includes updated guardrails and principles to guide Members and staff as they further explore and adopt this technology. The policy also establishes a process by which Member offices can submit new AI tools for review and approval through the My Services Request Portal on HouseNet. The CAO and the Committee on House Administration will review the policy annually and issue updated guidance as deemed necessary.

Senate Guidance and Initiatives

Similar to the approach taken by the House, the Senate Sergeant at Arms (SAA) established an institution-hosted working group to encourage and explore internal use of GenAI and share best practices. As the working group has progressed, the SAA has released a number of resources, including training materials, on its Generative AI project page.

In December 2023, the SAA Chief Information Officer (CIO) announced official internal guidance for Senators and staff, authorizing the use of several “conversational AI services.” The policy establishes the following guidelines:

Approved Tools

After thorough review, the SAA CIO approved the use of OpenAI’s ChatGPT, Google’s Bard AI, and Microsoft’s Bing AI Chat. These tools may be used only with the required compensating controls enabled, as outlined in the SAA’s risk assessment reports linked in the guidance document.

Restrictions on Use

The approved GenAI tools may be used only for research and evaluation tasks.

Only non-sensitive data can be used when interacting with these tools.

Noted Concerns and Additional Guidelines

The SAA CIO’s policy also offers additional guidelines to help Senators and staff mitigate risks, including:

Encouraging individuals to approach these tools with the same caution they would use with search engines, reemphasizing privacy and data security concerns

Advising individuals to assume that any information entered into an AI tool could become public, and to remain aware that a model may be able to infer information from a series of prompts

Emphasizing that all information provided by these tools should be verified and that human review of all generated products and content remains essential

The House’s and Senate’s proactive, transparent approaches to setting institutional guardrails for this emerging technology are creating an environment in which innovation can safely begin. They also acknowledge that staff and Members who may be weighing future regulatory approaches need an opportunity to work with and understand these new tools. With clear guidance authorizing the use of LLMs, staff can experiment with confidence that they are operating within the boundaries of authorized activities, and, as that policy expands, they will be able to adjust accordingly.

As Congress’ institutional approach to AI evolves, updates will be made here to provide an across-the-Hill perspective of what is allowed, what isn’t, and additional policy changes of note.