Key Terms and Examples of Legislative AI Adoption Over the Decades

An October 30, 2023 executive order issued by US President Joe Biden on the “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” defines AI as:

a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. Artificial intelligence systems use machine- and human-based inputs to perceive real and virtual environments; abstract such perceptions into models through analysis in an automated manner; and use model inference to formulate options for information or action.¹ (15 U.S.C. 9401(3))

The Organization for Economic Cooperation and Development (OECD) recently updated its definition of "artificial intelligence" (AI):

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that [can] influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.²

The term covers a spectrum of automated technologies, including but not limited to:

Predictive Modeling: Utilizing statistical techniques to predict future outcomes based on historical data, aiding in risk assessment, marketing, and other forecasting needs.³

Machine Learning (ML): Systems with the ability to learn from and improve with experience, used in predictive modeling, predictive text, data analysis, and statistical analysis.

Natural Language Processing (NLP): Analyzing and understanding human language to facilitate interactions between computers and people, enabling applications such as text analysis, translation, and chatbots.

Computer Vision: Interpreting and making decisions based on visual data, used in facial recognition, image and video analysis, and object detection.⁴

Speech Recognition: Translating spoken language into written text, aiding in transcription services, voice-activated systems, and customer service applications.

Over the past several decades, legislative bodies around the globe have incorporated these advanced tools into their operations to varying degrees, exploring the technologies’ potential to enhance efficiency, accuracy, and engagement in the legislative process.⁵ The following examples demonstrate the use of forms of AI in legislative environments over time.

Predictive Modeling

Predictive modeling is the use of algorithms to forecast future outcomes by discerning meaningful patterns in data. This technique gained traction among actuaries, marketers, and risk analysts during the 1990s and 2000s. In the legislative realm, predictive modeling is used to assess the potential impact of proposed bills on federal finances, an assessment sometimes referred to as a “fiscal note.”
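As a concrete illustration of forecasting from patterns in historical data, the following minimal sketch fits a straight-line trend to a made-up series of annual program costs and extrapolates it forward. It is not the method used by any budget office. The figures and the function names `fit_linear_trend` and `forecast` are hypothetical.

```python
from statistics import mean

def fit_linear_trend(years, values):
    """Ordinary least squares fit of values = intercept + slope * year."""
    x_bar, y_bar = mean(years), mean(values)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, values)) / sum(
        (x - x_bar) ** 2 for x in years
    )
    intercept = y_bar - slope * x_bar
    return intercept, slope

def forecast(intercept, slope, year):
    """Extrapolate the fitted trend line to a future year."""
    return intercept + slope * year

# Hypothetical historical data: program cost in $ millions per fiscal year.
years = [2019, 2020, 2021, 2022, 2023]
costs = [100.0, 104.0, 108.0, 112.0, 116.0]

intercept, slope = fit_linear_trend(years, costs)
projected_2025 = forecast(intercept, slope, 2025)
print(f"Estimated annual growth: {slope:.1f}M; projected 2025 cost: {projected_2025:.1f}M")
```

Real scoring models account for many interacting variables and behavioral responses; a single trend line only shows the basic idea of learning a pattern from past data and projecting it forward.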

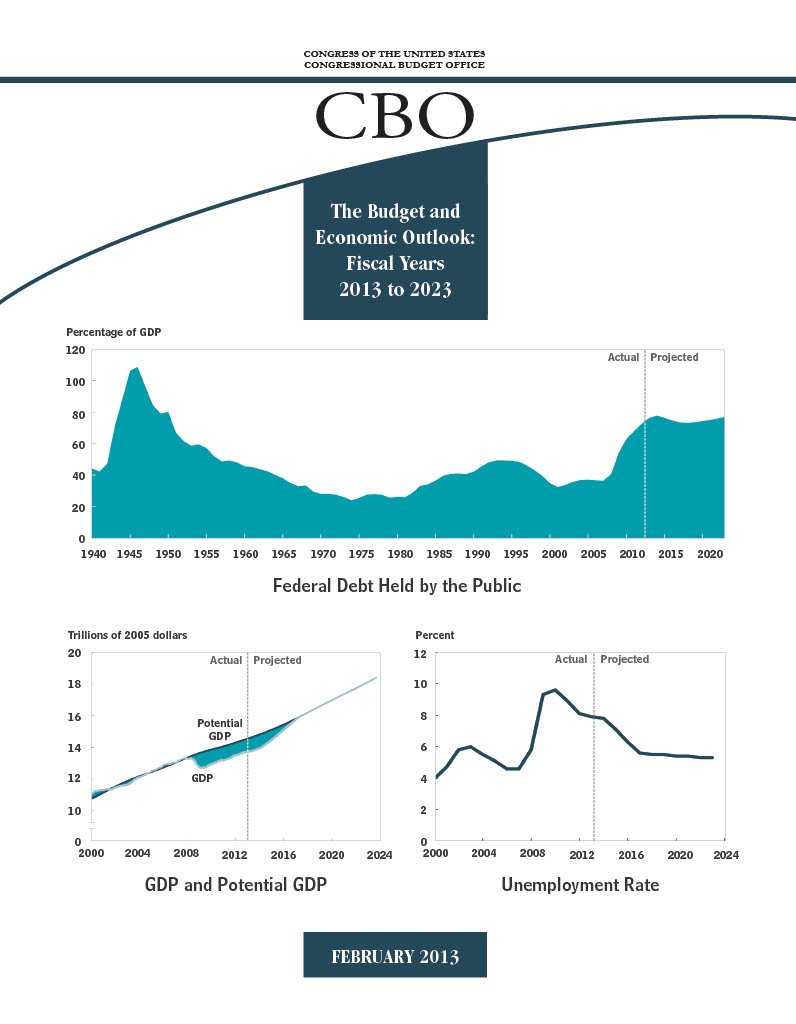

In the US federal context, notable instances include the models utilized by the Congressional Budget Office (CBO) and the Joint Committee on Taxation (JCT).

The CBO provides budget and economic information to Congress, including, for significant legislative proposals, a cost-benefit “score” demonstrating how the proposed policy would impact the federal deficit.

To accomplish this, CBO employs a suite of automated or AI models, one of which is the Policy Growth Model (PGM), which analyzes the interplay between economic growth and the federal budget. It factors in the effects of labor force changes, productive capital alterations, and total factor productivity on US economic activity, particularly the gross domestic product (GDP). Within this predictive model, CBO staff can estimate how variables such as hours worked, savings, investment in capital, and GDP react to economic shocks and fiscal policy shifts, including alterations in federal tax rates, spending, and deficits, providing legislators valuable insight into the potential effects of proposed policies.⁶

The JCT provides the US Congress with tax-related analysis and revenue projections to score how a change in tax policy would impact revenue collected. Policy models maintained by JCT include “the Individual Model, the Corporate Model, and the Estate and Gift Model.”⁷

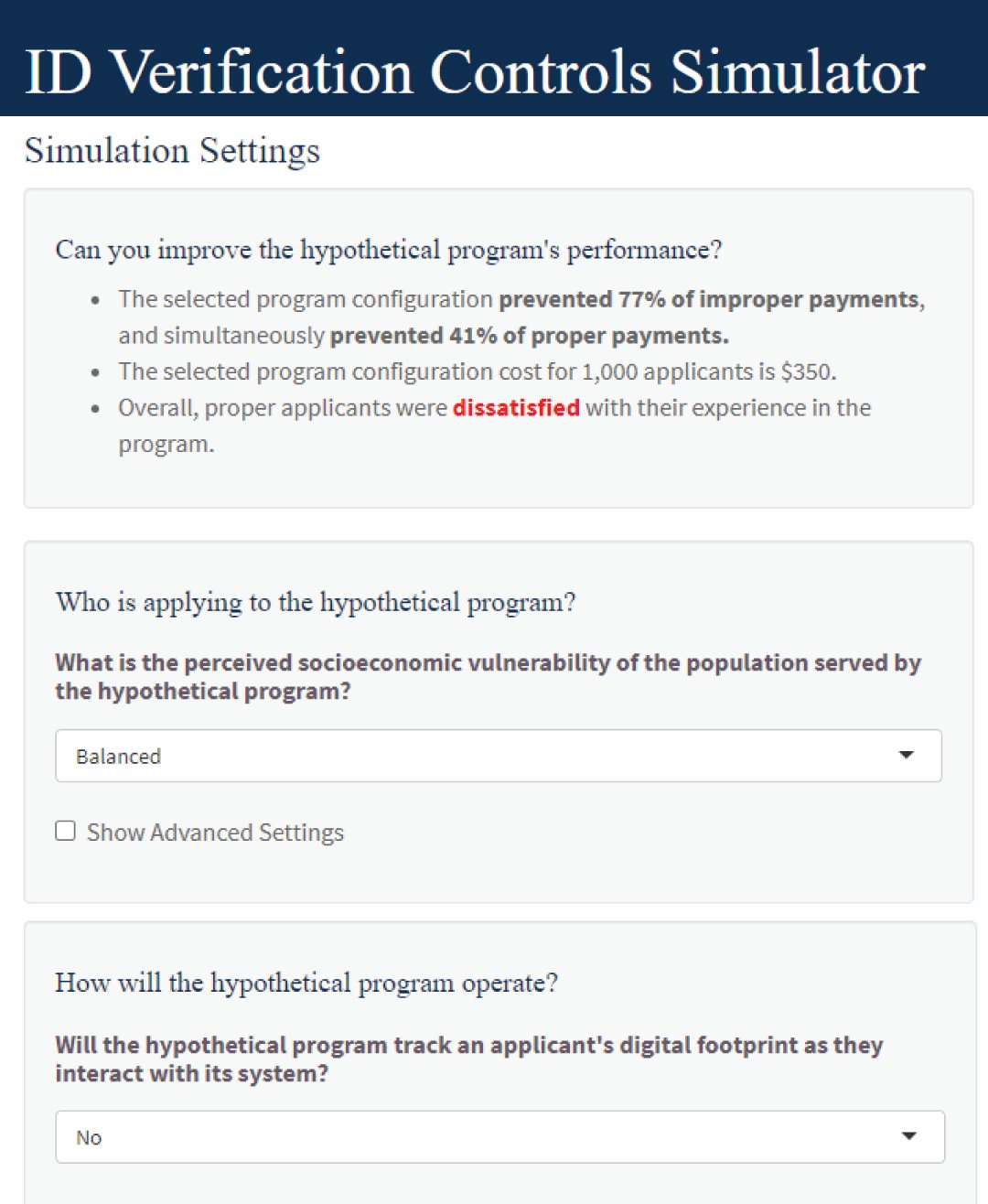

In a recent example, the US Government Accountability Office’s (GAO) Innovation Lab showcased a simulation tool in 2022 termed the “ID Verification Controls Simulator,”⁸ demonstrating how predictive modeling could leverage ML-trained simulations to assist policymakers in navigating policy tradeoffs. This simulator enables agencies and their associates to model hypothetical policy and design choices, exploring the ramifications of varying verification approaches on program access, fraud, and participant satisfaction.

For example, if a policymaker asks the simulator to model a policy design with zero tolerance for fraud, other characteristics (such as ease of use, user satisfaction, and accessibility) are adversely affected. Similarly, a program designed solely for ease of use and accessibility might result in an unacceptably high rate of fraud. The pilot simulator illustrates how AI-enabled dashboards can help policymakers understand the tradeoffs inherent in program design and how to balance them.
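The tradeoff described above can be sketched with a toy model. This is an illustration only: the linear relationships, the parameter values, and the `simulate` function are assumptions made for this example and do not reflect how GAO’s simulator works.

```python
def simulate(strictness, base_fraud=0.10, max_friction=0.30):
    """Toy model: stricter checks cut fraud but turn away more eligible applicants.

    strictness runs from 0.0 (no verification) to 1.0 (zero tolerance for fraud).
    All parameters are hypothetical and do not come from GAO's simulator.
    """
    if not 0.0 <= strictness <= 1.0:
        raise ValueError("strictness must be between 0 and 1")
    fraud_rate = base_fraud * (1.0 - strictness)      # falls as checks tighten
    access_rate = 1.0 - max_friction * strictness     # also falls as checks tighten
    return {"fraud_rate": fraud_rate, "access_rate": access_rate}

for s in (0.0, 0.5, 1.0):
    result = simulate(s)
    print(
        f"strictness={s:.1f}  "
        f"fraud={result['fraud_rate']:.1%}  "
        f"access={result['access_rate']:.1%}"
    )
```

Even in this simplified form, no single setting minimizes fraud and maximizes access at once, which is the kind of tradeoff an interactive dashboard lets a policymaker explore.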

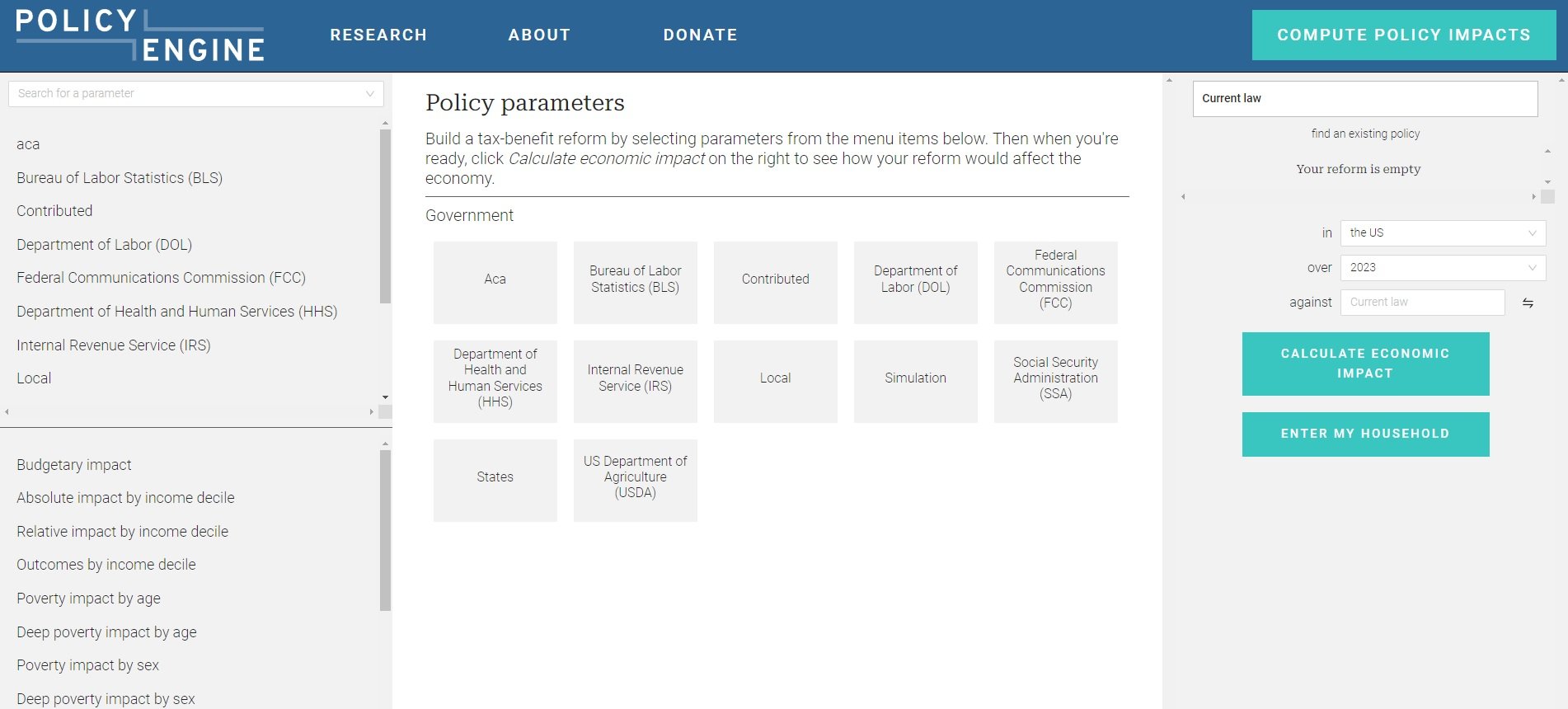

GAO’s policy simulator tool provides a glimpse of the kinds of complex policy interactions that predictive modeling — combined with other AI tools — could enable for legislatures in the near future. One open source project outside government is demonstrating how this policy modeling capability could help policymakers — and the public — better understand even broader potential impacts of proposed policy changes. PolicyEngine, which is currently available in the US and UK, allows users to propose policy changes and calculate the impact both on their own household and the economy overall.⁹

Both the GAO ID verification simulator and PolicyEngine demonstrate the emerging technical feasibility of giving lawmakers and staff greater autonomy to explore interventions and their potential effects in a format that is much more interactive and useful than a PDF report or chart. With institutional investment in interactive modeling tools, lawmakers could test their policy ideas and get instantaneous insights well beyond the narrow frame of cost-benefit or revenue analysis.

Machine Learning (ML)

Machine learning (ML) systems use algorithms that can learn and improve themselves by ingesting large volumes of data without requiring explicit programming. These algorithms detect patterns and draw inferences based on the data, and can be “trained” in several ways:

Supervised Machine Learning: Trained on labeled data to map inputs to outputs — “This means this.”

Unsupervised Machine Learning: Seeks patterns in unlabeled data — “Here’s some data. Go learn.”

Reinforcement Learning: Learns via feedback from interactions — “You got this right/wrong.”
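The first two training styles can be contrasted in a short sketch. The supervised part learns from labeled examples, while the unsupervised part groups the same values without any labels. The data (document page counts) and the function names are hypothetical, and reinforcement learning is omitted because it requires an interactive environment.

```python
from statistics import mean

# Supervised: labeled examples ("this means this") feed a nearest-centroid classifier.
def train_supervised(examples):
    """examples: list of (value, label). Returns the mean value per label."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Assign the label whose learned mean is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Unsupervised: unlabeled values are split into two clusters (1-D k-means, k = 2).
def cluster_two_groups(values, iterations=10):
    low, high = min(values), max(values)  # initial cluster centers
    if low == high:
        return sorted(values), []  # all values identical, nothing to separate
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)
    return sorted(group_low), sorted(group_high)

# Hypothetical data: page counts of documents, labeled by a human as short or long.
labeled = [(2, "short"), (4, "short"), (3, "short"), (40, "long"), (55, "long"), (48, "long")]
centroids = train_supervised(labeled)
print(predict(centroids, 10))

# The same page counts with the labels removed.
unlabeled = [value for value, _ in labeled]
print(cluster_two_groups(unlabeled))
```

The supervised model can name its categories because a person supplied the labels; the unsupervised one finds the same two groups but has no idea what to call them.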

Initially gaining traction in the 1980s, ML forms the backbone of many contemporary AI applications.

Since 2018, the Library of Congress (LC) has been actively researching and implementing ML technologies, including the creation of machine-readable text from digitized documents using Optical Character Recognition (OCR), generating standardized catalog records, extracting data from historical copyright records, and parsing legislative data.¹⁰ However, the Library is proceeding cautiously:

…at its simplest, machine learning (ML) and artificial intelligence (AI) tools haven’t demonstrated that they’re able to meet our very high standards for responsible stewardship of information in most cases, without significant human intervention.

As the Library of Congress, we have a responsibility to the American public. As shepherds of the largest library in the world, we also have a responsibility to all curious people, and especially to those whose stories we hold. We know how important it is to get information right, and we won’t implement technology that automates our work without thorough vetting to make sure we aren’t compromising our trustworthiness or the authenticity of the information we offer.¹¹

The Library will soon release a draft framework for AI planning with an opportunity for public input on its plans.

A November 2022 report by the RAND Corporation highlighted machine learning's potential to bolster public policy effectiveness. “By leveraging the increasing amount of available information, ML has the potential to more accurately predict policy outcomes, thereby reducing the risks of unintended consequences. … Furthermore, ML algorithms can automate data processes to offer more-rapid predictions that increase the possibility of early intervention.”¹²

Natural Language Processing (NLP)

Natural Language Processing enables computers to understand, interpret, and generate human language in a way that is both meaningful and useful — translating language that humans can understand to the “ones and zeros” that can be processed by a computer, and vice-versa. NLP is used in applications such as chatbots, translation services, sentiment analysis, and voice recognition systems.

Since 2017, the US House of Representatives has invested substantial resources in an NLP-based tool named the Comparative Print Suite (CPS). A collaborative effort between the House Office of the Clerk and the House Office of Legislative Counsel, the CPS provides staff with a robust legislative comparison tool that lets them visualize changes to existing law made through line edits, amendments, or newly drafted legislative proposals.¹³

Screen capture of the US Office of the House Clerk's Comparative Print Suite interface (2023)
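The basic idea of comparing two versions of legislative text can be sketched with Python’s standard `difflib` module. This is plain line-by-line text differencing and is far simpler than a production comparison tool; it makes no claim about how CPS works internally. The statutory text and the `compare_versions` function are invented for illustration.

```python
import difflib

def compare_versions(current_law, proposed_text):
    """Return unified-diff lines showing how proposed_text changes current_law."""
    return list(
        difflib.unified_diff(
            current_law.splitlines(),
            proposed_text.splitlines(),
            fromfile="current_law",
            tofile="proposed",
            lineterm="",
        )
    )

# Hypothetical statutory text, invented for this example.
current_law = """Sec. 1. The program shall serve 100 participants.
Sec. 2. Funding is authorized through 2024."""
proposed = """Sec. 1. The program shall serve 250 participants.
Sec. 2. Funding is authorized through 2024."""

# Lines starting with "-" were removed, "+" were added, and " " are unchanged.
for line in compare_versions(current_law, proposed):
    print(line)
```

A line-level diff like this flags that Sec. 1 changed, but it cannot interpret amendatory instructions such as “strike X and insert Y,” which is where language processing becomes necessary.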

Additional NLP projects in the US Congress include LC Labs’ experiments with legislative bill data to create bill summaries through natural language processing, which could augment the work of the Congressional Research Service and reduce the wait time for these important nonpartisan resources.¹⁴

GAO’s Innovation Lab has also harnessed NLP tools to enhance its responsiveness to Congressional needs,¹⁵ with tools developed over the past several years that include:

Project Sia: A tool that scrapes Congressional committee websites for hearing notices, compiling a list that enables GAO to notify committee staff about pertinent GAO reports ahead of scheduled hearings or other official business.

Project Titan: A tool aiding GAO staff in pinpointing relevant information within its past work product corpus, demonstrating how NLP can be utilized to increase internal accessibility of an entity’s institutional knowledge.

Project Wordworkr: A drafting tool assisting GAO staff in adhering to the “GAO style” in their work, encompassing nonpartisan language usage.

Although these tools are available only to GAO employees, they demonstrate noteworthy NLP-enabled applications that representative bodies could explore for wider use in a legislative context. Several of these initial prototypes have been superseded by newer applications, including GAO’s work on GenAI (discussed later).

Computer Vision

Computer vision facilitates the interpretation and analysis of visual data such as images and videos through pattern recognition techniques. A notable application of this technology is facial recognition, which began gaining widespread adoption around 2010.

Although the application of facial recognition has been limited in the US legislative arena, various implementations are either in use or under consideration globally.¹⁶ For example, the European Parliament has used computer vision to aid in analyzing extensive volumes of archived video content.¹⁷ Some legislatures, such as Brazil’s Senate, are employing facial recognition technologies for identity verification in electronic voting systems.¹⁸

Screen capture compilation of the Brazilian Senate Remote Voting System user interface and user identification sign-in screen (2023)

Speech Recognition

Speech recognition converts spoken language into text. Its consumer adoption surged in the 2010s with the introduction of Siri on Apple’s iPhones and Amazon’s Alexa.¹⁹

Recently, US Senator John Fetterman [D, PA] revealed that he began using speech recognition technology following a significant stroke in 2022.²⁰ The need to accommodate Fetterman led the Senate to adopt real-time closed captioning capabilities, illustrating how an accommodation made for one individual can benefit the institution as a whole. While AI-enabled transcription technology is improving rapidly, the Senate is currently opting to have these captions “produced by professional broadcast captioners rather than artificial intelligence in order to improve accuracy.”²¹

As speech recognition technology continues to advance, adoption within legislative bodies will likely increase, making proceedings more accessible and efficient. The technology could potentially serve a broader array of applications, such as real-time transcription of debates, aiding in document preparation, or even facilitating multilingual communication, thus playing a pivotal role in modernizing legislative processes and making them more inclusive. Already, some courts in the US are experimenting with automated speech-to-text tools to address a shortage of court reporters.²²

¹ Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (October 30, 2023)

² Luca Bertuzzi, “OECD updates definition of Artificial Intelligence ‘to inform EU’s AI Act,’” Euractiv, November 8, 2023

³ Experts disagree on whether predictive modeling should be considered AI. This report embraces a broad definition to illustrate how the adoption of automated technologies and algorithms in legislative workflows has progressed over time.

⁴ While listed separately here, computer vision is a subset of machine learning.

⁵ Monica Palmirani, Fabio Vitali, Willy Van Puymbroeck, Fernando Nubla Durango, “Legal Drafting in the Era of Artificial Intelligence and Digitisation,” Report to the European Commission (2022)

⁶ CBO’s Policy Growth Model presentation (April 2021)

⁷ Joint Committee on Taxation Frequently Asked Questions

⁸ GAO Innovation Lab ID Verification Controls Simulator

⁹ PolicyEngine Tax-Benefit Reform Economic Impact Calculator

¹⁰ AI at LC

¹¹ Laurie Allen, “Why Experiment: Machine Learning at the Library of Congress,” (November 13, 2023)

¹² Evan D. Peet, Brian G. Vegetabile, Matthew Cefalu, Joseph D. Pane, and Cheryl L. Damberg, “Machine Learning in Public Policy: The Perils and the Promise of Interpretability,” RAND Corporation (2022)

¹³ “House of Representatives’ Comparative Print Suite,” POPVOX Foundation

¹⁴ “Keeping Pace with AI: The Legislative Branch Charges Forward in Second Flash Report,” POPVOX Foundation (October 23, 2023)

¹⁵ “Science, Technology, Assessment & Analytics at GAO,” (September 2022) GAO-22-900426

¹⁶ J. von Lucke, F. Fitsilis, & J. Etscheid, “Research and Development Agenda for the Use of AI in Parliaments.” In Proceedings of the 24th Annual International Conference on Digital Government Research (pp. 423-433) Association for Computing Machinery (2023)

¹⁷ Archives of the European Parliament

¹⁸ “Brazil’s Senate develop a (shareable) app for remote voting,” OECD Observatory of Public Sector Innovation (OPSI) (April 28, 2020)

¹⁹ Rhodri Marsden, “Has voice control finally started speaking our language?” The Guardian (December 4, 2016)

²⁰ Anthony Adragna, “John Fetterman choked up during a hearing discussing how transcription technology changed his life following his stroke,” Politico (September 21, 2023)

²¹ Mini Racker, “Exclusive: John Fetterman is Using This Assistive Technology in the Senate to Help With His Stroke Recovery,” Time (February 1, 2023)

²² Jule Pattison-Gordon, “More Than Stenography: Exploring Court Record Options (Part 1),” Government Technology (December 8, 2023)