The Game Changer: GenAI

Generative AI (GenAI) refers to models capable of creating novel, human-like content, including text, images, audio, and video. Examples include Large Language Models (LLMs) for text generation, such as OpenAI’s GPT models (including ChatGPT), Google’s Bard, and Anthropic’s Claude, as well as models that work with non-text media (photo, video, sound, and other types of input and output), such as the image generators Stable Diffusion, Midjourney, and DALL-E 2.

Large language models use machine learning (ML) and natural language processing (NLP) techniques to ingest enormous amounts of text data. This training lets them “learn” the structure, grammar, and context of human language, so that they can “predict the next word” with increasing accuracy.
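To make the idea of “predicting the next word” concrete, the following is a deliberately simplified sketch, not a description of how any production LLM is built. It counts which word most often follows another in a tiny, invented corpus and uses those counts to guess the next word. Real LLMs use neural networks trained on vastly more text and operate on sub-word tokens; the corpus and the function names `train_bigram_model` and `predict_next_word` are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for illustration only.
corpus = (
    "the committee approved the bill "
    "the committee amended the bill "
    "the committee approved the budget"
)

model = train_bigram_model(corpus)
print(predict_next_word(model, "committee"))
```

In this toy corpus, “approved” follows “committee” more often than “amended” does, so it is the word the model predicts. The same principle, scaled up enormously and with far richer context than a single preceding word, underlies the text that LLMs generate.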

Alberto Mencarelli, a parliamentary official at the Italian Chamber of Deputies, recently highlighted a compelling rationale for the compatibility of legislatures with LLM-enabled tools, given the linguistic foundation of legislative work:

[I]t can be argued, to a broad approximation, that both parliamentary work and the functioning of LLMs have in common the fact that they use language as a key element. [L]inguistic activity (both written and oral) enables parliaments to create and transmit knowledge and rules and to negotiate between different political positions.¹

Mencarelli makes the case that the use of LLMs by legislatures is a natural evolution of these language-based systems.

The release of ChatGPT on November 30, 2022, marked a pivotal moment, propelling the adoption of GenAI tools by non-technical users. By early February 2023, analysts estimated that ChatGPT had reached 100 million monthly active users, making it the fastest-growing consumer application in history.²

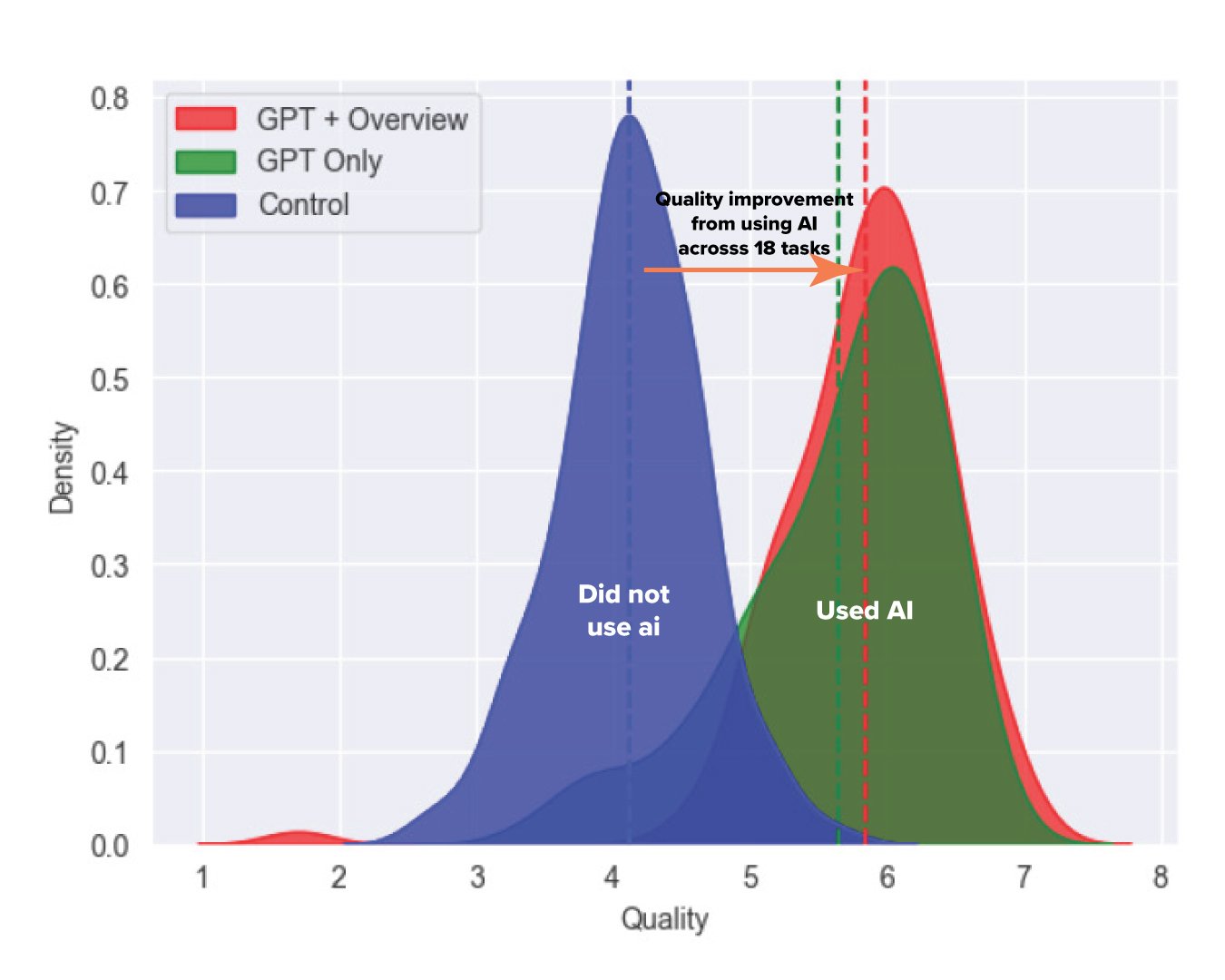

These tools are already reshaping work dynamics, particularly in knowledge-driven fields. A recent study conducted in collaboration with Boston Consulting Group to assess the impact of LLM tools on consultants' productivity found that “consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without.”³

Distribution of output quality across all the tasks. The blue group did not use AI; the green and red groups used AI, and the red group also received additional training on how to use it.⁴ Source: “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality.”

The study's insights are valuable for assessing the potential adoption of these tools within legislative settings, given the similarities between the tasks performed by legislative staff and those performed by professional consultants. Both roles require analyzing large volumes of information and making tailored recommendations. Just as consultants using AI saw gains in productivity and quality, legislative staff might use AI to expedite their research, craft more accurate policy memos, and address constituent inquiries more efficiently. However, legislative bodies must offer sufficient training and establish clear guidelines on AI usage to ensure the technology augments human judgment instead of supplanting or undermining it.

¹ Alberto Mencarelli, “LLMs and parliamentary work: a common conversational architecture,” Substack (August 3, 2023)

² Krystal Hu, “ChatGPT sets record for fastest-growing user base - analyst note,” Reuters (February 1, 2023)

³ Fabrizio Dell’Acqua et al., “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality,” Harvard Business School Technology & Operations Mgt. Unit Working Paper No. 24-013 (September 18, 2023)

⁴ Ethan Mollick, “Centaurs and Cyborgs on the Jagged Frontier,” One Useful Thing Substack (September 16, 2023)