Harnessing AI to Master a New Policy Topic: A Step-by-Step Guide for MPs and Parliamentary Staff

Introduction

AI tools can help you rapidly build expertise in new subject areas, drawing on institutional or government resources and other trusted sources of information. This roadmap provides a 101-level guide for using AI to master a new policy topic. From understanding basic terminology to preparing for policy debate and deliberation, this guide provides sample prompts, recommendations, and a list of AI pitfalls to avoid.

Let’s get started!

Important: Before using any of these strategies, review your institution's official guidance regarding the use of GenAI tools. If your institution has not issued guidance, it is a best practice to create and use a paid subscription account when relying on commercially available LLMs. Paid accounts typically come with additional data security features, which should remain enabled to protect your data privacy.

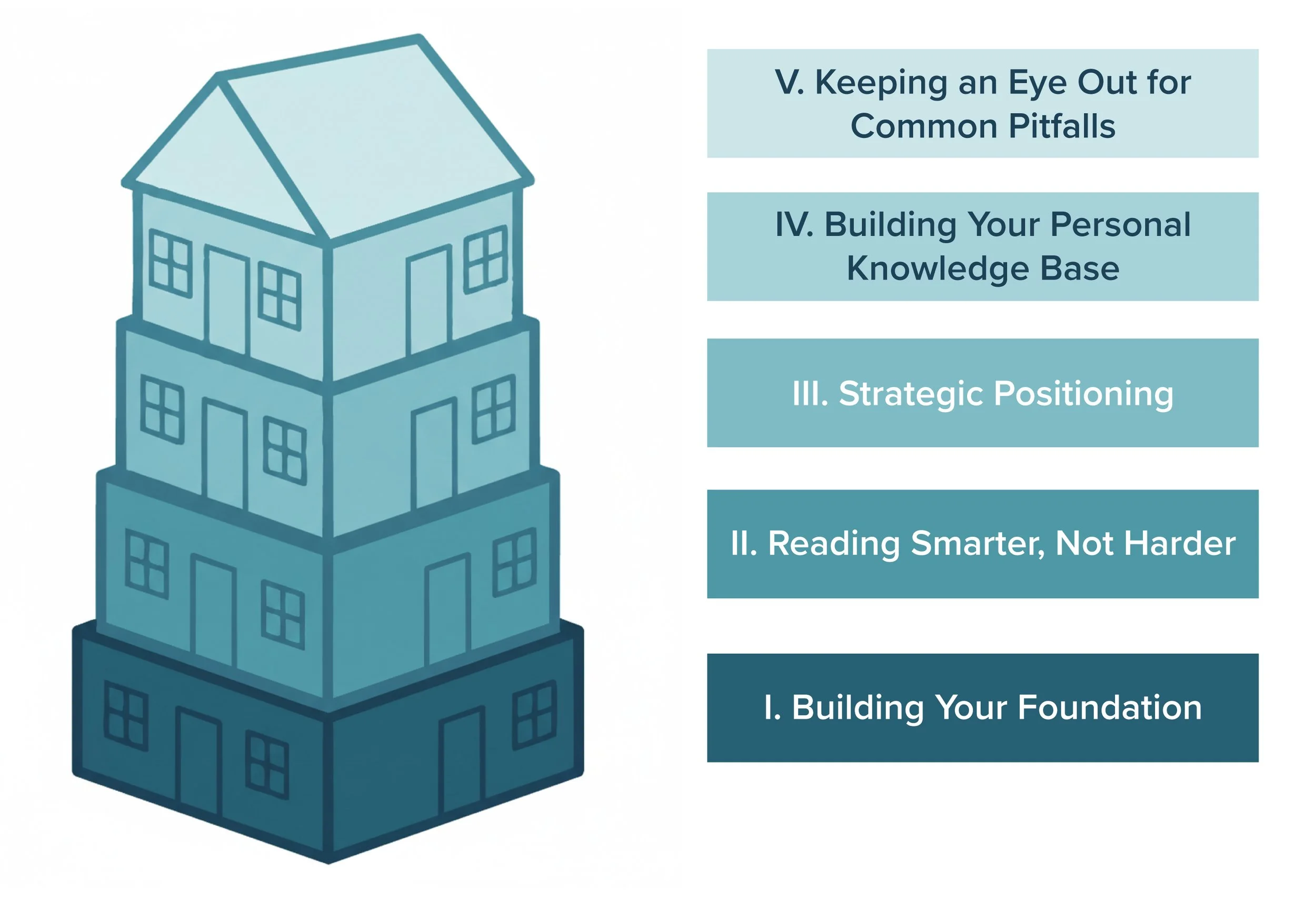

Building Your Policy House

Mastering a new policy area with intention is like building a house.

It works best to start with the foundation (basic concepts and terminology).

Then, you have to frame the structure (understanding key issues and stakeholders).

Next, the walls and roof go up (deep policy knowledge and customized strategies).

Finally, it is time to furnish it (building out specific expertise that is actionable for hearings, legislation, and constituent engagement).

AI can assist at every stage as a tool to support you. And like any tool, it is only as effective as its user.

Stage I: Building Your Foundation

Finding solid ground by not being afraid to ask the "Dumb Questions"

Large language models (LLMs) like OpenAI’s ChatGPT, Anthropic’s Claude, or Mistral AI’s Le Chat can help you quickly find your footing with basic concepts, key terminology, and fundamental issues without embarrassment.

What This Looks Like

As an MP or staffer, you are used to learning new things every day, but when taking on a new policy portfolio, you will sometimes need answers to questions you might hesitate to ask a colleague or expert. An LLM provides a judgment-free space to build foundational knowledge quickly and to give your research a starting place.

Sample Prompts

Tip: LLMs perform best when you give them general context about who you are and why you are asking the question. The more relevant information you provide, the more helpful their support will be.

For Basic Definitions:

"I'm an [MP/staffer] serving in [institution]. I have been assigned to a new committee on [policy area]. I need you to explain [specific term/concept] in plain language. I'm well educated in other policy areas but have zero background in this field. Give me a clear explanation and then tell me why this matters for national policy. Please limit your answer to a three-page memo to start."

For Understanding Acronyms:

"I keep seeing [acronym] in policy documents about [issue area]. What does it stand for, what does it mean, and what do I need to know about it as an [MP/staffer] serving in [institution and country]?"

For Issue Landscape Overview:

"I'm a [MP/staffer] from [district/region type in country]. Help me understand the current federal policy landscape on [issue]. What are the three to four major debates that have taken place since 2020? What are the main points of disagreement between the parties? Who are some of the major special interests and stakeholders in this arena?"

Best Practices

Only use institution-approved LLMs (if guidance exists).

Do not copy-paste LLM-generated information without validation. LLMs are an incredible tool to begin your research and orient yourself. But you must verify key facts through authoritative sources.

Follow-up is your friend. If something is not clear, ask: "Can you explain that more simply?" or "What is a real-world example of this?" If something does not seem right to you, ask it for a source or more information. Validate the information it gives to you. Remember: using an LLM is not the same as doing a Google search. LLMs allow you to have an ongoing dialogue as if you are having a conversation with a research intern.

Garbage In, Garbage Out. How you craft your prompt will greatly influence the quality of the LLM’s response. Provide context (without sharing any classified, sensitive, or personally identifiable information [PII]) and clarify your needs. Prompt crafting takes practice, but it is like a muscle: the more you practice, the stronger your skill set will become.
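For readers who keep their favorite prompts in a file or script, the "provide context" advice above can be sketched in a few lines of Python. This is only an illustration, not a required workflow: the template text is adapted from the Basic Definitions sample prompt, and the names `BASIC_DEFINITION` and `build_prompt`, along with the example values, are hypothetical. The sketch refuses to produce a prompt if any piece of context is missing or blank.

```python
from string import Template

# Hypothetical reusable template, adapted from the "Basic Definitions" sample prompt.
BASIC_DEFINITION = Template(
    "I'm $role serving in $institution. I have been assigned to a new "
    "committee on $policy_area. I need you to explain $term in plain language. "
    "I'm well educated in other policy areas but have zero background in this "
    "field. Give me a clear explanation and then tell me why this matters for "
    "national policy."
)


def build_prompt(template: Template, **context: str) -> str:
    """Fill in every placeholder; raise ValueError if context is missing or blank."""
    blank = [name for name, value in context.items() if not value.strip()]
    if blank:
        raise ValueError(f"Blank context fields: {blank}")
    try:
        return template.substitute(**context)
    except KeyError as missing:
        # substitute() raises KeyError when a placeholder has no value supplied
        raise ValueError(f"Missing context field: {missing}") from None


# Example values are placeholders only; never include sensitive information or PII.
prompt = build_prompt(
    BASIC_DEFINITION,
    role="an MP",
    institution="the National Assembly",
    policy_area="energy policy",
    term="capacity markets",
)
print(prompt)
```

The resulting text can be pasted into whichever institution-approved LLM you use. Failing loudly on missing context is a deliberate choice here, since a half-filled prompt tends to produce a generic answer.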

Stage II: Reading Smarter, Not Harder

Using AI to Digest Research & Policy Documents

Once you get your feet under you with a solid foundation, it is time to dive into the deeper work of extracting key information from lengthy reports, academic papers, government reports, and oversight documents. As an MP or staffer, your problem will rarely be a lack of resources to turn to, but rather a lack of time to wade through those resources and digest the information they have to offer. AI can be particularly helpful at this stage.

What This Looks Like

You will need to consume massive amounts of information quickly. AI can help you identify key takeaways, understand complex arguments, and highlight key points to dig into without the need for you to read every page of every document.

Sample Prompts

For Government Reports:

"I'm a [MP/staffer] working to stay informed on [topic]. I'm attaching a government report on [topic]. I need to understand:

1. The key policy questions this report addresses

2. The main arguments on each side

3. Any data or statistics I should remember

4. Implications for pending legislation

Using only this report as a source, please summarize these four elements in a way that prepares me for a policy briefing."

Tip: After getting the summary, ask follow-up questions like: "What is the strongest argument for/against [position]?" or "Are there any regional differences in how this plays out?" or “What is not addressed in this report that I should look into as a next step in my research?”

For Academic Research Papers:

"I'm a [MP/staffer] working to stay informed on [topic]. I'm attaching an academic study on [topic]. I'm not a researcher, so I need you to help me understand:

- What question were they trying to answer?

- What did they find?

- How strong is the evidence?

- What are the policy implications?

- What from the paper is actionable as a recommendation?

- Are there limitations I should be aware of if I cite this?

Explain this in plain language suitable for a policy memo."

For Publicly Available Oversight Reports:

"I'm attaching an oversight report on [topic]. I'm an [MP/staffer] who serves on [committee]. Help me identify:

- The most significant findings

- Any red flags or areas of concern

- Recommendations that were not implemented (and why)

- Potential oversight questions this raises

- Who are the key players mentioned?

Focus on information relevant to parliamentary oversight and the jurisdiction of the committee."

For Interest Group Materials:

"I'm a [MP/staffer] working to stay informed on [topic]. I'm attaching a policy brief from [organization] about [issue]. I know this comes from a specific perspective. Help me:

- Identify the main argument they are making

- Note what evidence they use to support it

- Flag any claims that seem overstated or would need verification

- Identify what arguments from the other side they do not address

I need to evaluate this objectively."

Best Practices

Upload documents directly when using platforms that allow it (ChatGPT Plus, Claude, etc.). By providing a specific document for the LLM to review and summarize, you narrow its scope and focus its answers on the source you provide. This reduces, but does not eliminate, the risk of hallucination or false claims. As a reminder, only upload documents that are publicly available or nonsensitive.

Read the original source to validate any statistic or claim you plan to use. An LLM is like an assistant who is flagging for you what it thinks is important. It is your responsibility to evaluate the information it provides for you and come to your own conclusions.

Compare perspectives. If your aim is to get a holistic understanding of a policy topic, ask an LLM to compare perspectives by providing it with resources produced by different parties, stakeholders, etc.

Ask "What am I missing?" Explicitly prompt: "What perspectives or arguments are not represented in this document?"

Stage III: Strategic Positioning

Building the Walls and Roof to House Your Member's Leadership Opportunities

The opportunity to become a leader on a unique policy issue is often up for the taking…if you can find it. Brainstorming with an LLM can help an MP or staffer identify a specific policy niche that aligns with their passions and where they can build credibility by having a real impact.

Sample Prompts

Finding Your Policy Lane:

"I'm an MP in the [parliament] from [district type/region]. My background includes [relevant experience]. Within [policy area], I need to identify opportunities where I can:

1. Bring a unique perspective others are not offering

2. Address a district/regional concern that scales to federal policy

3. Build bipartisan credibility

4. Fill a gap in current committee leadership

Analyze the current landscape and suggest three or four specific niches I could own."

Tip: Remember, the more information you provide, the more context the LLM has to work with as it explores ideas and brainstorms with you. For example, you might want to follow the above prompt with “What additional information can I provide for you to improve your brainstorming with me?”

Connecting District Priorities to Policy Leadership:

"I'm a [MP/staffer] and my [district/region] has [specific characteristics: industries, demographics, challenges]. Within [policy area], how can I frame my policy work as directly serving constituent needs? Give me specific angles that connect local impact to federal policy leadership."

Identifying Cross-Cutting Opportunities:

"I'm a [MP/staffer] who works with [committees] and has expressed interest in [policy areas]. Where do these interests intersect? What emerging issues touch multiple areas where [I] could be an early voice?"

Follow-up Prompt Focused on Strategy:

"For [specific opportunity you identified], what would a 6-month leadership plan look like? Include: legislation to introduce or cosponsor, hearings to request, stakeholders to meet with, and regional events to hold."

Best Practices

Include your (or your MP’s) authentic interests. The LLM that you are interacting with doesn’t know you, the MP you work for, or your genuine interests. To get the best responses, you need to provide that context.

Reality-check with leadership. AI might suggest ideas that are impractical politically or procedurally. Once again, it only knows what it knows. This is why humans will always be essential for double-checking the work of LLMs (and other generative AI tools) and for judging how AI-provided information or recommendations fit within real-world dynamics and situations.

Think long-term. Like building a sturdy house, building policy credibility takes time. Look for areas with staying power, not just current headlines.

Document your thinking. Keep notes on why certain options were chosen or rejected.

Tool Highlight: Using AI-Powered Deep Research for Comprehensive Analysis

Asking an LLM a question (or prompt) will set in motion an immediate conversation for you to engage in. For general Q&A, brainstorming, or initial research, this rapid response interaction can be very helpful. However, as you begin to dig into very weedy issues requiring a deeper, comprehensive understanding of complex policy issues, implementation challenges, historical context, and stakeholder positions, Deep Research can be a game changer.

What is Deep Research?

Deep Research tools (available in ChatGPT, Claude, Gemini, and Mistral) go beyond single-query responses to conduct multi-step investigations, cross-reference sources, and synthesize findings into comprehensive reports—like assigning a research assistant a week-long project.

See this guide on Understanding AI-Powered Deep Research Tools, including sample prompts.

Stage IV: Building Your Personal Knowledge Base

Using AI to Draft and Refine Policy Ideas

Now that your foundation is set, general knowledge has been built out, research has been undertaken, and key resources have been reviewed, we hope that you are feeling more confident with your new policy portfolio. With that confidence comes the task of thinking through the practical details of policy implementation, identifying potential unintended consequences, and drafting legislative language as a starting point. AI can help you transform policy concepts into concrete legislative proposals.

Sample Prompts

Stress-Testing Policy Ideas:

"I'm a [MP/staffer] working on a policy proposal to [description]. Help me think through potential problems by identifying:

- Unintended consequences we should plan for

- Implementation challenges at the agency level

- Potential opposition arguments and how to address them

- Cost considerations and whether it requires new funding

- How this interacts with existing law

- Which agencies or programs would be affected

Be tough: I need to identify problems before they are raised in a hearing, markup, or debate."

Drafting Bill Summaries:

"Help me draft a one-page bill summary for [title/topic]. The summary should:

- Explain what problem we are solving (two or three sentences)

- Describe what the bill does (three or four bullet points)

- Note who it helps (with numbers if possible)

- Explain how it is different from current law or other proposals

- Address the cost question

This is for constituent outreach, so keep it in plain language."

Developing Section-by-Section Outlines:

"I need to create a section-by-section outline for legislation on [topic]. The bill should accomplish [goals]. Help me:

- Organize the policy into logical sections

- Identify where we need definitions

- Note where we need to amend existing law (identify specific statutes)

- Flag where we need authorization of appropriations

- Suggest reporting or accountability requirements

This is a preliminary outline. Do not draft legislative language."

Finding Legislative Models:

"I serve as an [MP/staffer] in [parliament]. Research recent legislation introduced in similarly sized countries over the past 10 years that addresses [a similar issue or uses a similar mechanism]. I need to:

- Identify bill similarities and success rates

- Note what approach they took

- Understand what happened to them (passed, failed, reintroduced)

- Extract good language or structures we could adapt

I'm looking for models, not to copy directly."

Best Practices

Do not rely on LLMs for legislative drafting without thorough, dedicated legal analysis. Legal codes are detailed, complicated collections of rules, precedents, and interpretations. Commercially available LLMs are not thoroughly trained on the legal history of your country and therefore cannot be relied upon to draft legislative language that completely aligns with your or your MP’s legislative intent. Any legislative language drafted by an LLM should undergo thorough human review.

You are responsible for the work that you produce. Although LLMs can save time in creating initial drafts of memos, briefing materials, etc., it is your responsibility to ensure the information, tone, and approach align with your own or your MP’s positions.

Verify all statutory references. If AI cites a legal code or any reference material, double-check the citations.

Tool Highlight: Using Claude Projects or Custom GPTs for Deep Portfolio Expertise

Some LLM platforms let you create a personalized AI assistant that draws on a specific knowledge base. In other words, this feature allows you to build a customized bot, grounded in your curated collection of trusted sources, that you can question directly. This is helpful because rather than re-uploading documents repeatedly or starting from scratch with each query, you can build a standing resource that knows your specific issue area deeply and responds primarily based on documents you trust. Because the underlying model can still draw on its general training, continue to verify its answers against the source documents.

See this guide to Creating and Using Custom GPTs.

Use Cases for Custom GPTs or Claude Projects:

Fact-Checking Your Own Understanding:

"Based on the documents in your knowledge base, is it accurate to say that [claim]? If so, which document supports this and where? If not, what is the correct information?"

Comparing Sources:

"I have reports from [Organization A] and [Organization B] in your knowledge base. How do their recommendations on [issue] differ? Where do they agree?"

Finding Specific Information:

"Search the government reports in your knowledge base for any mentions of [specific program issue]. What did they find and when?"

Preparing Talking Points:

"Based on the hearing transcripts in your knowledge base, what questions have witnesses been asked about [topic]? What were the best answers? Help me prepare responses for the upcoming debate."

Historical Context:

"Based on the legislative proposals in your knowledge base, how has thinking evolved on [specific policy question] over time?"

Best Practices

Start small, grow over time. Begin with ten to fifteen essential documents, add more as you go.

Version control matters. Note when documents were uploaded. Update your knowledge base regularly; like the base LLM, it will not update on its own.

Test it. Ask questions you know the answer to and verify accuracy before relying on it.

Share selectively. If others on your team or colleagues work on this issue, consider making it a shared resource so that it can collectively be tested and maintained.

Keep it updated. As new reports, hearings, and analyses come out, refresh your knowledge base.

Do not upload sensitive information. Only include public documents—never classified information, constituent casework, or internal strategy memos.
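The record-keeping habits above (noting upload dates, refreshing regularly, and keeping only public documents) can be supported with a simple log kept outside the AI tool. The Python sketch below is one hypothetical way to do that. The `SourceDocument` fields, the `needs_review` function, the 180-day threshold, and the sample entries are all illustrative assumptions, not features of Custom GPTs or Claude Projects.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SourceDocument:
    title: str
    uploaded_on: date
    is_public: bool  # only public, nonsensitive documents belong in the knowledge base


def needs_review(docs, today, max_age_days=180):
    """Return titles of documents that are non-public or were uploaded before the cutoff."""
    cutoff = today - timedelta(days=max_age_days)
    return [d.title for d in docs if not d.is_public or d.uploaded_on < cutoff]


# Hypothetical log entries for illustration only.
knowledge_base = [
    SourceDocument("Audit office report", date(2024, 1, 15), True),
    SourceDocument("Committee hearing transcript", date(2025, 3, 1), True),
    SourceDocument("Internal strategy memo", date(2025, 3, 10), False),
]

for title in needs_review(knowledge_base, today=date(2025, 4, 1)):
    print("Review:", title)
```

A shared spreadsheet would serve the same purpose. What matters is that someone on the team can see at a glance which sources are stale and which should never have been uploaded.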

Stage V: Keeping an Eye Out for Common Pitfalls

As you experiment using LLMs to support you in your policy role as an MP or staffer supporting an elected official, it is important to be aware of common errors to avoid and remain intentional about the responsible use of these tools. Remember to always act in accordance with any institution-issued guidance and the understanding that you are responsible for the information you use and products you create.

Here are some common pitfalls to watch out for:

1. The "First Draft is Final Draft" Trap

An LLM’s initial response is a starting point or a first draft, not a finished product. Always iterate, review, and double check its work to ensure accuracy and that it matches the voice and beliefs of you or the MP you work for.

2. The Citation Problem

Never cite "ChatGPT" or "Claude" as a source. Trace facts back to original sources and verify them.

3. The Echo Chamber Effect

LLMs reflect patterns in their training data. Actively seek out perspectives an LLM might not surface on its own, or upload specific documents directly to focus its attention on sources you choose.

4. The Jargon Trap

AI tools often use more formal/technical language than necessary. Iterate with the LLM to ensure that the content matches your needs and that of your audience.

5. The Timeliness Issue

Knowledge cutoffs mean an LLM may not know about very recent developments. Always verify the current status of a bill, and cross-reference current events against news articles. If using a Custom GPT, make sure to update the tool’s resource bank routinely.

6. The Confidentiality Breach

When using an LLM in any context (regardless of subscription model or privacy settings), do not input PII, classified information, constituent information, internal strategy, or anything not public.

7. The Over-Reliance Risk

LLMs are a tool to be added to your toolbox. They are not a substitute for your judgment or expertise as either an MP or a staffer. You are responsible for all final products and decisions.
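As a small aid for pitfall 6 (The Confidentiality Breach), some offices may find it useful to run a quick automated screen on text before pasting it into an LLM. The Python sketch below is a deliberately crude illustration: the two patterns and the `screen_prompt` function are assumptions made for this example, they catch only obvious email addresses and phone numbers, and they cannot recognize classified material, constituent details, or internal strategy. Human judgment remains the real safeguard.

```python
import re

# Illustrative patterns only; real PII takes many more forms than these two.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone number": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def screen_prompt(text):
    """Return the labels of any patterns found in the text (empty list if none)."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(text)]


draft = "My constituent jane.doe@example.org called from +44 20 7946 0958."
warnings = screen_prompt(draft)
if warnings:
    print("Remove before sending:", ", ".join(warnings))
```

An empty result does not mean the text is safe to share. It only means these two patterns were not found.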

You Are Going to Be Amazing

The goal of this roadmap is to inspire you and equip you with cutting-edge tools to excel in your work serving your communities and country at large. Although LLMs can be a force multiplier for your work, it is essential to remember that they are not a replacement for the traditional pathways to expertise. The MPs and staffers who will excel are those who combine technological tools with human judgment, institutional knowledge, and genuine curiosity about the issues.

As you continue in your role, please stay in touch and stay informed on additional use cases and best practices for using LLMs and other GenAI tools in a legislative context by visiting popvox.org/ai.

This guide was developed by POPVOX Foundation to support parliamentarians and their staff in using AI tools responsibly and effectively.

About POPVOX Foundation

POPVOX Foundation is a 501(c)(3) nonpartisan nonprofit organization with a mission to help democratic institutions keep pace with a rapidly changing world. Through publications, events, prototypes, and technical assistance, the organization helps public servants and elected officials better serve their constituents and make better policy.

Questions or feedback? Contact us at info@popvox.org